Learning ASP.Net MVC series:

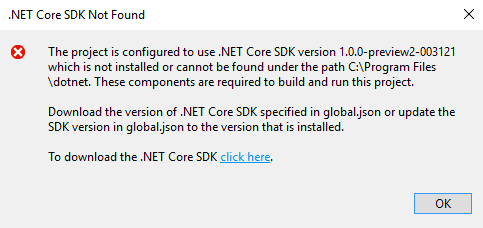

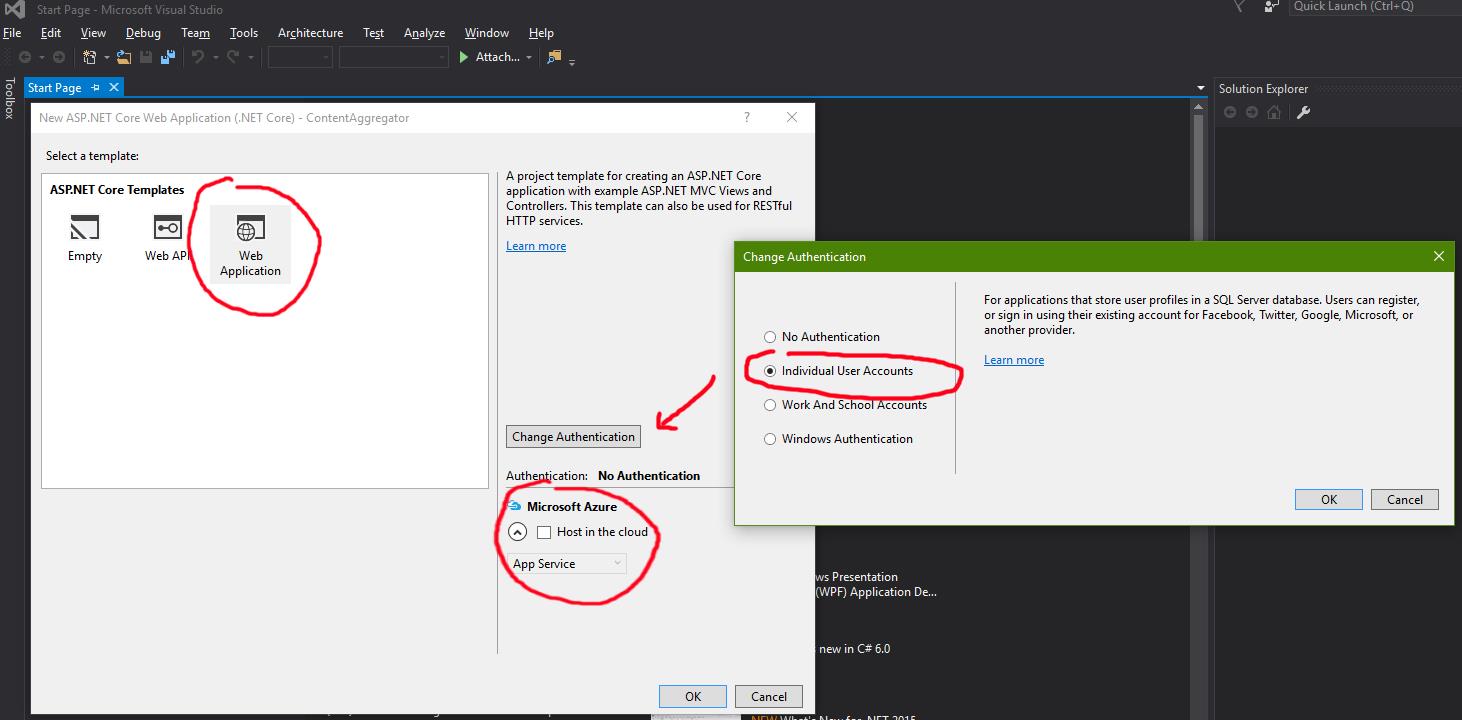

- Setup

- MVC Concepts

- Authentication

- Entity Framework Fundamentals

- Upgrading project to .NET Core 1.1

- Dependency Injection and Services

After spending a day writing and working on the application, I realized that many of the concepts I take for granted have not been discussed. Consider this part an introduction to the things *I* know about ASP.Net MVC. :)

Emveesee

The Model View Controller pattern attempts to separate three different concerns of an application: the flow (Controller), the display and user interface (View), and the various data objects that are passed, validated and manipulated (Model), which are also responsible for the logic and rules of the application. In ASP.Net, MVC means:

- the models are POCOs, for which validation constraints, display options and other aspects of how they are intended to be used are expressed with attributes decorating the classes or their properties.

- the controllers are classes inheriting from Controller, with names ending in "Controller". Their public methods are called controller actions and represent endpoints for HTTP calls. Attributes are again used to configure these actions, for example whether they must be accessed with POST or PUT. The responsibility of controllers is to... well... control the action in the application.

- the views are files with the .cshtml extension. They live in the Views folder and, by convention, the view for a controller action is /Views/ControllerName/ActionName.cshtml, where ControllerName is written without the "Controller" suffix. While I guess someone could hack MVC to use the old Web Forms view engine, the preferred view engine is Razor (the one with the @ signs). The direction of ASP.Net MVC views and templates is fine control over the generated markup, as opposed to the old ASP.Net way of encapsulating everything in server side user controls. The preferred way to encapsulate control behavior is now on the client side, with the help of frameworks like AngularJS and ReactJS.

Add to this services and middleware. Services encapsulate logic. For example, a service may determine aspects of how data flows from the controller to the view, or validate the values of a model class. Conceptually these two services would belong to the Controller and the Model, respectively. In code, however, they are separate classes, usually implementations of interfaces (for testing and dependency injection reasons), and the interfaces declare their purpose.

Middleware are composable components that react to what happens in the HTTP stream (requests and responses). Most of what MVC does internally depends on middleware and services.

MVC controversies

The ugly truth is that MVC has been around since the late 1970s and people implement it differently every time. Because it spans a large domain (it handles everything, basically), the vague wording used to describe the pattern causes a lot of confusion. Why did Microsoft choose MVC for their new version of ASP.Net? Well, first of all because their first attempt, ASP.Net Forms, which tried to bring the desktop application development style to the web, failed miserably. Second, because at the time they were making the decision, Ruby on Rails was the coolest thing since man discovered fire. I mean, it made a shitty programming language like Ruby look useful! (Just kidding, irate Ruby developer. I was trying to see if you're paying attention). People are still fighting over whether the application logic belongs in the model, in the controller or even in a separate part (services?).

My own interpretation is that models are mostly data classes. They should have code that handles their own internal state, but nothing else. The controller controls the flow of the data. If the user accesses an action, the method directs execution towards the component that handles that action. Therefore, for me, application logic is neither in the controller nor in the models.

Imagine a family: the wife tells the husband "go to the market and buy 10 eggs and some tomatoes!". Wifey is the user, the husband is the controller. He understands the intent of the user and directs execution towards its implementation. Now, the husband could go to the market and buy the eggs himself, but that would be bad form (heh heh), so he goes to his two sons and tells them "Frank and Joe, go to the market! Frank, get me some eggs, 10 of them. Joe, get me some tomatoes for a salad. Now, git!" (get it? git? I am on a roll). At this point the sons are confused: are they Model or are they Controller? Meanwhile, the eggs and tomatoes are clearly part of the model. An egg may spoil, for example, and that is probably the responsibility of the egg. You may consider the market basket containing eggs and tomatoes to be the model. That conveniently leaves aside the part where the user inspects the quality of the purchases and chastises the poor controller for it.

Certainly, ASP.Net MVC leans towards my interpretation of things. Classes in the Models folder of the default application are just classes with decorated properties. The code that interprets the attributes and their values, and binds parameters to properties, is a service. The code that does the actual work, after the controller has determined it's OK to run, again lives in managers and services. For example, the template uses sign-in and user managers (SignInManager and UserManager) that are implemented in the framework itself. If one inlined all of them, it would look to me as if the controller, not the model, takes care of the logic of the application.

Convention over configuration

ASP.Net MVC embraced the convention over configuration paradigm. You don't need to register controllers anywhere, or declare the link between views and controllers. A controller for movies is a class called MoviesController, placed in the Controllers folder, and by convention every call to its actions starts with /movies. A view for a List action is placed in /Views/Movies/List.cshtml and is expected to be reached at http(s)://host:port/movies/list. Typical code looks like this:

```csharp
public IActionResult List()
{
    // Renders /Views/Movies/List.cshtml, found by naming convention
    return View();
}
```

View() is shorthand for rendering the view that matches this action by naming convention.
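To make the convention part less magical, here is a minimal sketch of the idea. It is not how MVC actually discovers controllers; the real discovery code has more rules than this. It assumes two hypothetical plain classes, HomeController and MoviesController. The sketch maps a URL to a class by name, then to a public method, then to the view path that View() would look for first. Nothing is registered anywhere; the names alone do the work.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Hypothetical controllers: plain classes that only follow the naming convention.
// Real MVC controllers would inherit from Controller.
public class HomeController { public void Index() { } }
public class MoviesController { public void List() { } }

public static class ConventionRouter
{
    private const string Suffix = "Controller";

    // Maps "/movies/list" to MoviesController.List and returns the view path
    // View() would look for first; returns null when nothing matches.
    public static string ResolveView(string path)
    {
        string[] segments = path.Trim('/').Split(new[] { '/' }, StringSplitOptions.RemoveEmptyEntries);
        string controller = segments.Length > 0 ? segments[0] : "Home";
        string action = segments.Length > 1 ? segments[1] : "Index";

        // No registration: the class is found by its name plus the "Controller" suffix.
        Type type = typeof(ConventionRouter).Assembly.GetTypes()
            .FirstOrDefault(t => t.Name.Equals(controller + Suffix, StringComparison.OrdinalIgnoreCase));
        if (type == null) return null;

        // The action is simply a public instance method with the matching name.
        MethodInfo method = type.GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
            .FirstOrDefault(m => m.Name.Equals(action, StringComparison.OrdinalIgnoreCase));
        if (method == null) return null;

        string controllerName = type.Name.Substring(0, type.Name.Length - Suffix.Length);
        return "/Views/" + controllerName + "/" + method.Name + ".cshtml";
    }
}
```

For example, "/movies/list" (in any casing) resolves to /Views/Movies/List.cshtml. An empty path falls back to the Home controller and its Index action.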

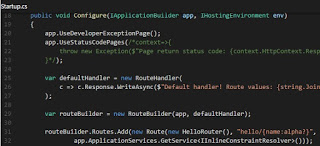

The MVC pipeline is based on what they call middleware, roughly what the old ASP.Net called HTTP modules and handlers, I guess. The main component of an MVC application is its WebHostBuilder, which uses a class, usually named Startup, to configure itself. The constructor and methods of Startup are invoked using dependency injection. This means their parameters are typically interfaces, and the specific implementation is determined by ASP.Net MVC based on the user's configuration, if any.
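Here is a deliberately tiny container. It is a sketch under my own assumptions, not the real Microsoft.Extensions.DependencyInjection. It maps an interface to an implementation and builds objects by resolving their constructor parameters recursively. IGreeter, EnglishGreeter, FakeGreeter and NewsPage are hypothetical names. The sketch ignores lifetimes (singleton, scoped, transient) and circular dependencies, which the real container handles.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// A deliberately tiny container (no lifetimes, no cycle detection).
// It is NOT Microsoft.Extensions.DependencyInjection, just the core idea behind it.
public class TinyContainer
{
    private readonly Dictionary<Type, Type> _registrations = new Dictionary<Type, Type>();

    // Map an interface (the "what") to an implementation (the "how").
    public void Register<TService, TImplementation>() where TImplementation : TService
    {
        _registrations[typeof(TService)] = typeof(TImplementation);
    }

    public T Resolve<T>()
    {
        return (T)Resolve(typeof(T));
    }

    private object Resolve(Type requested)
    {
        Type concrete;
        if (!_registrations.TryGetValue(requested, out concrete))
            concrete = requested; // concrete classes can be built without registration
        if (concrete.IsInterface || concrete.IsAbstract)
            throw new InvalidOperationException("No implementation registered for " + requested.Name);

        // Use the constructor with the most parameters and resolve each parameter recursively.
        var constructor = concrete.GetConstructors()
            .OrderByDescending(c => c.GetParameters().Length)
            .First();
        object[] arguments = constructor.GetParameters()
            .Select(p => Resolve(p.ParameterType))
            .ToArray();
        return constructor.Invoke(arguments);
    }
}

// Hypothetical service, two implementations and a consumer.
public interface IGreeter { string Greet(string name); }
public class EnglishGreeter : IGreeter { public string Greet(string name) { return "Hello, " + name; } }
public class FakeGreeter : IGreeter { public string Greet(string name) { return "fake greeting"; } }

public class NewsPage
{
    private readonly IGreeter _greeter;

    // The consumer asks for the interface; it never chooses the implementation.
    public NewsPage(IGreeter greeter) { _greeter = greeter; }

    public string Header(string user) { return _greeter.Greet(user); }
}
```

Registering FakeGreeter instead of EnglishGreeter in a test changes nothing in NewsPage. That is the whole point of hiding services behind interfaces.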

Something similar applies to action parameters. Values are obtained from the body of the request, the query string or posted form fields. An action like List(int id, string name) on MoviesController will get the correct parameters from a call like /movies/list?id=1&name=Steven. Routing also contributes values. The default template, {controller=Home}/{action=Index}/{id?}, covers the most common URL conventions. With it, the same result can be achieved with /movies/list/1?name=Steven, for example. The values can also be retrieved for a method looking like List(User user), if the User class has Id and Name properties. This is called model binding and most of the interaction between the browser and the .NET code at the backend will be done through it.
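A toy version of routing plus model binding helps me picture what happens. This is a sketch under heavy assumptions. It only understands the default {controller=Home}/{action=Index}/{id?} shape and simple query strings. It binds strings to properties with Convert.ChangeType, while the real model binders also handle forms, bodies, collections, prefixes and validation. ToyBinder and the User class are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical model with the Id and Name properties mentioned in the text.
public class User
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class ToyBinder
{
    // Mimics {controller=Home}/{action=Index}/{id?}: missing segments fall back
    // to defaults and a third segment becomes "id". Keys are case-insensitive.
    public static Dictionary<string, string> Route(string url)
    {
        var values = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            ["controller"] = "Home",
            ["action"] = "Index"
        };
        string[] parts = url.Split(new[] { '?' }, 2);
        string[] segments = parts[0].Split(new[] { '/' }, StringSplitOptions.RemoveEmptyEntries);
        string[] names = { "controller", "action", "id" };
        for (int i = 0; i < segments.Length && i < names.Length; i++)
            values[names[i]] = segments[i];

        // Query string values are added too; a route value with the same name is kept (a simplification).
        if (parts.Length > 1)
        {
            foreach (string pair in parts[1].Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries))
            {
                string[] kv = pair.Split(new[] { '=' }, 2);
                string key = Uri.UnescapeDataString(kv[0]);
                if (!values.ContainsKey(key))
                    values[key] = kv.Length > 1 ? Uri.UnescapeDataString(kv[1]) : "";
            }
        }
        return values;
    }

    // Fills a new T by matching value names to writable properties (simple types only).
    public static T Bind<T>(Dictionary<string, string> values) where T : new()
    {
        var model = new T();
        foreach (PropertyInfo prop in typeof(T).GetProperties())
        {
            if (prop.CanWrite && values.TryGetValue(prop.Name, out string raw))
                prop.SetValue(model, Convert.ChangeType(raw, prop.PropertyType));
        }
        return model;
    }
}
```

Both /movies/list/1?name=Steven and /movies/list?id=1&name=Steven end up as a User with Id 1 and Name Steven. That is the same effect the text describes for List(User user).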

Extension methods: dependency injection and middleware

The pattern for configuring your application is to build it with fluent methods, using the so-called builder pattern. You start with the WebHostBuilder, for example, then you call .UseKestrel(), .UseIISIntegration(), .UseStartup() and so on. The default template code looks like this:

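```csharp
using System.IO;
using Microsoft.AspNetCore.Hosting;

public class Program
{
    public static void Main(string[] args)
    {
        var host = new WebHostBuilder()
            .UseKestrel()
            .UseContentRoot(Directory.GetCurrentDirectory())
            .UseIISIntegration()
            .UseStartup<Startup>()
            .Build();
        host.Run();
    }
}
```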

These methods are extension methods, their complex functionality hidden behind this simple pattern of use. Check out the simple .AddMvc() method and how deceptively it covers so much complexity. In the source code it calls other extension methods, each with its own complexity. Eventually, all of them either register some dependency or configure and add middleware to the MVC pipeline. It seems to me that dependency injection methods start with Add, while the middleware-inserting methods start with Use.
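The Add/Use split can be sketched with a hypothetical builder and two extension methods, AddToyViews and UseToyLogging. Both are invented for this example and are not real ASP.Net APIs. AddToyViews mimics the "try add" registration style ASP.Net uses internally (TryAddSingleton): it only registers a default implementation when nothing is registered yet, so a registration the user made earlier wins. Returning the builder from each method is what makes the fluent chaining work.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical builder: a service map plus an ordered list of pipeline steps.
// The real IServiceCollection and IApplicationBuilder are far richer.
public class ToyAppBuilder
{
    public Dictionary<Type, Type> Services { get; } = new Dictionary<Type, Type>();
    public List<string> Pipeline { get; } = new List<string>();
}

// Hypothetical service interface with a default and a user-supplied implementation.
public interface IToyCompiler { }
public class DefaultToyCompiler : IToyCompiler { }
public class MyToyCompiler : IToyCompiler { }

public static class ToyAppBuilderExtensions
{
    // "Add" convention: register services for dependency injection.
    public static ToyAppBuilder AddToyViews(this ToyAppBuilder builder)
    {
        // "Try add" semantics: keep an implementation the user registered earlier.
        if (!builder.Services.ContainsKey(typeof(IToyCompiler)))
            builder.Services[typeof(IToyCompiler)] = typeof(DefaultToyCompiler);
        return builder; // returning the builder enables fluent chaining
    }

    // "Use" convention: append a step to the request pipeline.
    public static ToyAppBuilder UseToyLogging(this ToyAppBuilder builder)
    {
        builder.Pipeline.Add("logging");
        return builder;
    }
}
```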

As an example, let's take one of the lines inside .AddMvc(): builder.AddRazorViewEngine();. Following one of the many branches defined by this extension pattern (I am still not sure how much I like it), we get to MvcRazorMvcCoreBuilderExtensions.AddRazorViewEngineServices, which registers a lot of dependencies. Take a look at this:

```csharp
// This caches compilation related details that are valid across the lifetime of the application.
services.TryAddSingleton<ICompilationService, DefaultRoslynCompilationService>();
```

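One can change the implementation of the compilation service! TryAddSingleton only registers the default if nothing has been registered for ICompilationService yet. Alternatively, let's look at .UseStaticFiles(). It's a wrapper over:

```csharp
app.UseMiddleware<StaticFileMiddleware>();
```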
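Since UseStaticFiles boils down to adding a middleware, here is a sketch of how such a pipeline can be composed. It follows the same shape as ASP.Net Core's ApplicationBuilder, where each middleware is a function that takes the next delegate and returns a new one wrapping it. ToyContext, ToyRequestDelegate and ToyPipelineBuilder are stand-ins I made up; there is no real HttpContext here.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Stand-in for HttpContext with just what this sketch needs.
public class ToyContext
{
    public string Path { get; set; }
    public string Response { get; set; }
    public List<string> Log { get; } = new List<string>();
}

// Stand-in for RequestDelegate: something that handles a request asynchronously.
public delegate Task ToyRequestDelegate(ToyContext context);

public class ToyPipelineBuilder
{
    private readonly List<Func<ToyRequestDelegate, ToyRequestDelegate>> _middleware =
        new List<Func<ToyRequestDelegate, ToyRequestDelegate>>();

    // Each middleware receives the next delegate and returns a delegate wrapping it.
    public ToyPipelineBuilder Use(Func<ToyRequestDelegate, ToyRequestDelegate> middleware)
    {
        _middleware.Add(middleware);
        return this;
    }

    public ToyRequestDelegate Build()
    {
        // End of the pipeline: nobody handled the request.
        ToyRequestDelegate app = context =>
        {
            context.Response = "404";
            return Task.CompletedTask;
        };
        // Wrap from last to first, so the first registered middleware runs first.
        for (int i = _middleware.Count - 1; i >= 0; i--)
            app = _middleware[i](app);
        return app;
    }
}
```

A static files middleware written this way would check ctx.Path. It would then either write the file and return without calling next, or call next(ctx) to pass the request along. Anything registered before it, like a logger that does work before and after await next(ctx), wraps everything registered after it.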

Open source .NET Core

As you've seen from the examples above, I've often linked to the source code of methods and classes. That is because Microsoft finally decided to open source .NET Core. This lets people find and solve their own problems and track down where pesky, hard to explain bugs are coming from. The extension method pattern makes it difficult to explore what is going on, as you have to switch from one project to another on the GitHub interface (or your own file system, depending on how you decide to work). Dependency injection makes it even harder. You first have to find the interface responsible for your current programming task, then find all its implementations and what registered them in the first place. I tried to find a decent exploration tool, but found none and I am too busy to make one of my own. Homework? :)

Even so, it is a great boon that one can look into the innards of the Microsoft code. It not only helps pinpoint issues, but also teaches how one of the biggest software companies writes code. I don't want to dissect middleware in this post, but I strongly suggest you take a look at how they are made and how they work. Whenever I find it useful, I will mention the middleware responsible for what I am discussing, so make an effort to look at its source code and see what it actually does.

Attributes

Attributes are used all over ASP.Net MVC. They specify which HTTP methods controller actions accept, how to authorize access, how to validate models and how to bind incoming parameters to models. Here is an example:

```csharp
using System.ComponentModel.DataAnnotations;
using Microsoft.AspNetCore.Authorization;
using Microsoft.AspNetCore.Mvc;

// Hypothetical controller name, used only to give the action a home
public class ToolsController : Controller
{
    // The user needs to be authorized to access this method
    [Authorize]
    // only POST requests...
    [HttpPost]
    // ...over HTTPS are accepted
    [RequireHttps]
    // The URL for this method will be /tools/util, not /tools/hardcore
    [ActionName("util")]
    public IActionResult Hardcore([FromBody] /* the data will be taken from the body of the request */ HardcoreData data)
    {
        // only show the view if the model is valid
        if (ModelState.IsValid) return View();
        // otherwise return a bad request with the validation errors
        return BadRequest(ModelState);
    }
}

public class HardcoreData
{
    // value needs to be set (not null)
    [Required]
    // Id needs to be an absolute http, https or ftp URL
    // ([DataType(DataType.Url)] would only be a display hint and validates nothing)
    [Url(ErrorMessage = "The Id needs to be a URL")]
    // shorter than or equal to 500 characters
    [StringLength(500, ErrorMessage = "Maximum URL length is 500 characters")]
    public string Id { get; set; }

    // Range validation from 0 to 100
    [Range(0, 100, ErrorMessage = "Value needs to be between 0 and 100")]
    // Custom validation using the class and method mentioned (checks that the value is even)
    [CustomValidation(typeof(HardcoreDataValidator), nameof(HardcoreDataValidator.ValidateValue))]
    public int Value { get; set; }
}

public class HardcoreDataValidator
{
    public static ValidationResult ValidateValue(int value)
    {
        return value % 2 == 0
            ? ValidationResult.Success
            : new ValidationResult("Value needs to be even");
    }
}
```

These attributes are read and used by various services registered at startup. Everything can be changed. For example, you may change the validation system to interpret the RangeAttribute values differently, ignore RequiredAttribute or use custom attributes. Attribute classes only mark the intent of the developer and do almost nothing by themselves.
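To see that attributes only declare intent, here is a sketch that validates a trimmed copy of HardcoreData outside of MVC. It uses the Validator class from System.ComponentModel.DataAnnotations, which reads the attributes and runs their checks. SampleData, SampleDataValidator and SampleDataChecker are hypothetical names, and MVC's own validation pipeline is more involved than this single call. The Id uses [Url], since [DataType(DataType.Url)] is only a display hint and never fails validation.

```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical, trimmed copy of HardcoreData with the same kinds of attributes.
public class SampleData
{
    [Required]
    [Url(ErrorMessage = "The Id needs to be a URL")]
    [StringLength(500, ErrorMessage = "Maximum URL length is 500 characters")]
    public string Id { get; set; }

    [Range(0, 100, ErrorMessage = "Value needs to be between 0 and 100")]
    [CustomValidation(typeof(SampleDataValidator), nameof(SampleDataValidator.ValidateValue))]
    public int Value { get; set; }
}

public class SampleDataValidator
{
    public static ValidationResult ValidateValue(int value)
    {
        return value % 2 == 0
            ? ValidationResult.Success
            : new ValidationResult("Value needs to be even");
    }
}

public static class SampleDataChecker
{
    // The attributes do nothing by themselves: Validator reads them and runs each check.
    public static List<ValidationResult> Check(SampleData data)
    {
        var results = new List<ValidationResult>();
        Validator.TryValidateObject(data, new ValidationContext(data), results, validateAllProperties: true);
        return results;
    }
}
```

Note validateAllProperties: true. Without it, Validator.TryValidateObject only checks the [Required] attributes.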

Models

I've mentioned previously that models are used to move data back and forth. Model binding is responsible for taking HTTP requests and turning their parameters into C# classes. Services then use those models: Entity Framework, the validation system or the Razor views, for example. You've seen in the previous example how an object may be read from the body of a request. Similarly, models can be read from the HTTP parameters sent to the action method. Read an example of an investigation to see how various methods of model binding can be used with different attributes.

An important use case for models is validation. Some of it was demonstrated above; read more in the documentation. An interesting part of it is client side validation, which works out of the box with the right JavaScript imports and the right attributes.

Views

In ASP.Net Forms, the code and the presentation were (somewhat) separated into .aspx markup and .cs codebehind. The aspx syntax is probably isomorphic with the Razor syntax, and I remember that at one time you could use ASP.Net Forms with Razor. In MVC, views contain code, using Razor, but they are not strongly coupled to a specific piece of code. In fact, one can reuse a view for multiple models, especially partial views, which take over from UserControls, I guess. So there is quite an overlap between ASP.Net Forms and MVC, if you add a separate injection mechanism to Forms in order to decouple markup and codebehind.

For views, the biggest difference as far as I am concerned is the encapsulation of reusable content, which used to be done with controls. Panels, Grids and UserControls all inherited from a Control class that handled the various phases of the ASP.Net lifecycle. There was no job interview in which you weren't asked about the ASP.Net lifecycle, and now it's irrelevant. Nowadays, you render things from the markup up, with a focus on the client side.

HTML helpers and Tag Helpers are what allow you to encapsulate some rendering logic.

What caused this switch to a new paradigm? Well, I would say HTML5 and JavaScript frameworks. You would have a wonderful Grid control rendering a nice table layout and the developer would cry foul because he wanted everything with DIVs. You would have a nice Calendar extension control and the dev would dismiss it immediately because he wanted to use the latest jQueryUI client side calendar. Most of all, it was because the web designer would use Microsoft-agnostic tools that create pure HTML. The poor dev would then have to reverse engineer that in order to get the same layout with the default controls. Today a grid is only a DIV, a Razor @foreach and a template for the rows using the values of the items displayed. Certainly all of this can be encapsulated further into your own library of HTML helpers, but you have complete control over it.

In ASP.Net Core MVC, partial views are joined by View Components, which also replace the old child actions. If you thought the Microsoft interpretation of MVC was a little vague, this will make your head explode. View Components are most similar to controllers, only you can't call them directly via HTTP, they are not part of the controller lifecycle and they can't use filters. Their views live in /Views/ControllerName/Components/ViewComponentName/ViewName or /Views/Shared/Components/ViewComponentName/ViewName. You may return them directly from a controller action, or invoke them from a view using the wonderfully ridiculous syntax @await Component.InvokeAsync("Name of view component", <anonymous type containing parameters>).

If not specified by the user, views are discovered by their location in the project. You can pass a view name explicitly, or even the exact path of the .cshtml file, although hard-coding paths is ugly and not recommended. Otherwise, when you just return View();, MVC looks in /Views/ControllerName/ViewName.cshtml and then in /Views/Shared/ViewName.cshtml. As we have come to expect, this default behavior can be changed by implementing a custom IViewLocationExpander.
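The view lookup described above can be pictured as a list of location formats tried in order. The formats below follow the style of Razor's defaults, where {0} is the view name and {1} the controller name. The optional expand function plays roughly the role of IViewLocationExpander. ToyViewLocator and the /Themes/ path in the example are hypothetical, and the real engine also caches results and deals with areas.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ToyViewLocator
{
    // {0} = view name, {1} = controller name (without the "Controller" suffix).
    public static readonly string[] DefaultFormats =
    {
        "/Views/{1}/{0}.cshtml",
        "/Views/Shared/{0}.cshtml"
    };

    // Returns the first candidate path that exists, or null if none does.
    // 'expand' may add, remove or reorder formats, like a location expander would.
    public static string Find(string controller, string view, ISet<string> existingFiles,
        Func<IEnumerable<string>, IEnumerable<string>> expand = null)
    {
        IEnumerable<string> formats = expand == null ? DefaultFormats : expand(DefaultFormats);
        return formats
            .Select(format => string.Format(format, view, controller))
            .FirstOrDefault(path => existingFiles.Contains(path));
    }
}
```

Passing an expand function that prepends "/Themes/Dark/{1}/{0}.cshtml" would make a themed view win over the regular one. Everything else would still fall through to the defaults.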

You may specify a model type for a view with the @model directive, which helps a lot with Intellisense. Otherwise, you may pass server side data through the ViewData dictionary or its dynamic wrapper, ViewBag. These allow dynamic use of properties, but don't help much with Intellisense, and mistakes in property names only show up at runtime.

Needless to say, before I actually go into the code, views are a bit of a mystery to me as well. They also clash a bit with the architecture of an application that makes most sense to me: API + client side code. I feel I need to discuss this, so...

Types of MVC application architecture

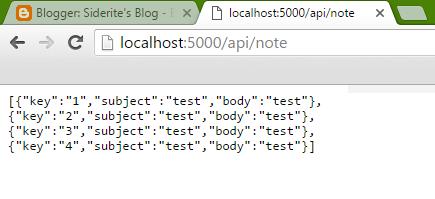

In the full .NET Framework, ASP.Net MVC and ASP.Net Web API were two different things, with a huge overlap in functionality. In .NET Core, they are the same thing, gathered under the umbrella of MVC. A controller action can receive AJAX calls in JSON format and return properly formatted HTML5 markup, for example. It is very difficult to find a reasonable way to separate the two concepts in .NET Core. However, there are two major ways of using them, separating them by use, as it were. The MVC application that uses controllers and views is still a product of turning ASP.Net Forms into a Ruby on Rails clone. While the overall architecture of the application has changed, giving more control to developers, it also constrains them into a type of functional architecture that may be, frankly, obsolete already.

There are three types of architectures that I will discuss for a very simple application that displays news items using their title, description, url and image:

- starting from an ASP.Net Forms page and a list of NewsItem objects in C#, we use an .aspx page that contains a Grid control. We define the way the title is rendered as a link, the description as a short text block and the image as a side thumbnail.

- starting from an ASP.Net MVC controller and a list of NewsItem objects in C#, we render a view which uses a Razor @foreach to display sections with a title link, a description and a thumbnail.

- starting from an HTML page, we fire an AJAX call to a .NET API that returns a JSON array of NewsItem objects. We then render them as sections with a title link, a description and a thumbnail, maybe by using an MVC client-side library like AngularJS.

See what I mean? The first two versions are basically the same. Whether the mechanism for rendering comes from a Forms Control or from a Razor loop is irrelevant to the overall design of the app. The third, though, presents some very interesting ideas:

- The website is not a .NET website. It's pure HTML. It can be served from any type of server, on any platform, can be created with any tools.

- There is no visual interface on the actual .NET server side. It's a simple API that sends and receives data in serialized form.

- The MVC architecture moves towards the client side, where views, models and controllers are just Javascript code, HTML and CSS.

- There is a very clear functional separation of concerns. There is server side development: C#, serialization and persistence of data, sensitive or resource intensive processing, the good ole things that .NET developers love. And there is the client side development: HTML, Javascript, CSS, responsive design, native mobile apps and all that crap that designers and frontend developers do.

(Again, joking, dear frontend or mobile developer! Just making sure you weren't asleep)
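For the third architecture, the server side is reduced to turning data into a serialized form. Here is a sketch of what such an API could send back for the news example. It assumes a hypothetical NewsItem class and uses System.Text.Json from later .NET versions; ASP.Net Core 1.1 itself used Json.NET. Property names are camelCase, the usual convention for JavaScript clients.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical NewsItem with the four fields from the news example.
public class NewsItem
{
    public string Title { get; set; }
    public string Description { get; set; }
    public string Url { get; set; }
    public string ImageUrl { get; set; }
}

public static class NewsApi
{
    // camelCase names, the usual convention for JavaScript clients.
    private static readonly JsonSerializerOptions Options = new JsonSerializerOptions
    {
        PropertyNamingPolicy = JsonNamingPolicy.CamelCase
    };

    // What the API endpoint sends back: data only, no markup.
    public static string Serialize(IEnumerable<NewsItem> items)
    {
        return JsonSerializer.Serialize(items, Options);
    }

    // What a .NET client (or a test) would do with the response.
    public static List<NewsItem> Deserialize(string json)
    {
        return JsonSerializer.Deserialize<List<NewsItem>>(json, Options);
    }
}
```

Any client, whether a web page, a mobile app or another API, only needs to agree on this JSON shape.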

The conclusions are staggering, actually. With no concern for presentation, the server side API framework can be incredibly tiny. Efforts can be turned towards making it efficient, fast, secure, lighter on resources and scalable. There is no need for a Razor engine, HTML helpers, partial views or View Components; no one cares about them. Instead, what it enables is working with any kind of client side user interface. Native mobile apps on all platforms, multiple web sites and other APIs could all just attach to the API and present functionality. Meanwhile, the client side interface developer is free of any dependency on Microsoft tools. They also need not worry about how many servers there are, where they are located, or the general background functionality of the application.

I've worked this way and it was great! People working on iOS, Android and web applications would just come to me and ask for an API that does this and that. After days of fighting over how the API signature should look :) everyone would just do what they are good at. Even more, because we were so different, bugs were easier to discover when we tried to connect our work over this simple interface.

The downsides are blessings in disguise. As an API call needs to finish quickly and return small quantities of data, the developer is forced to consider from the get go things like: pagination, chunking, asynchronous programming, concurrency, etc. Instead of importing a list of URLs for the news and then waiting for the output of the page while the server side is spidering the data, the app needs to show "importing data, please wait" and then periodically query the API if the import is finished. When hundreds of people try to do this, there is no problem, as the list of links to spider just grows and the same process extracts the data. If two users import the same links, they only get spidered once.
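The import flow described above can be sketched like this, assuming a hypothetical ImportService. The API action only queues links and returns, a background worker does the spidering, and clients poll for status. Because the status dictionary is keyed by URL, a link requested by two users is queued and spidered once. A real app would run ProcessQueue from a background service and persist the state.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical import service: API calls only queue work, a background worker
// spiders the links, and clients poll GetStatus until the import is done.
public class ImportService
{
    private readonly ConcurrentDictionary<string, string> _status =
        new ConcurrentDictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    private readonly ConcurrentQueue<string> _queue = new ConcurrentQueue<string>();

    // Called from the API action; returns immediately ("importing data, please wait").
    public void RequestImport(IEnumerable<string> urls)
    {
        foreach (string url in urls)
        {
            // TryAdd succeeds only for the first request of a link, so duplicates are not queued.
            if (_status.TryAdd(url, "pending"))
                _queue.Enqueue(url);
        }
    }

    // Called periodically by the client.
    public string GetStatus(string url)
    {
        string status;
        return _status.TryGetValue(url, out status) ? status : "unknown";
    }

    // Assumed to run on a single background worker; spider does the actual fetching.
    public void ProcessQueue(Action<string> spider)
    {
        string url;
        while (_queue.TryDequeue(out url))
        {
            spider(url);
            _status[url] = "done";
        }
    }
}
```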

Even if the application that we will be working on is not based on this design, consider from the beginning if you even need to use ASP.Net in an MVC way. The world is moving away from "applications" and towards "services". Perhaps the API itself would only be a front that accesses other APIs in the background, microservices that are optimized perfectly for the tiny bit they perform.

Data Access Architecture

A small rant against ORMs

Just like with the overall design, one may use different ways of accessing data. The ASP.Net MVC guide for working with data suggests a single clear path: Microsoft's ORM, Entity Framework. As I have yet to use it in any serious capacity, I will not explain EF concepts here. I will ask you a question instead: do you even need an ORM?

Object Relational Mappers are tools that abstract the database from the viewpoint of a developer. They work with contexts, sets and strongly typed objects and have great Intellisense support. Entity Framework started as a way to map an existing database to a .NET data framework, but it now also goes the other direction: code first! You start writing your app using Entity Framework as if you already had everything you need, and it creates and maintains the database in the background. Switching from SQL Server to PostgreSQL or SQLite or even a custom data persistence method is a breeze. They sound great!

However, if you already know what persistence model you use and are proficient in designing and optimizing the data structure there, using an ORM starts to lose its appeal. Just as with ASP.Net Forms, you have little control over the way the ORM chooses to communicate with the database. It may do better than you or it may do horribly. You start developing your app, everything works fine, you add feature after feature and when you finally load the actual real life data something goes wrong and you have no idea what and where.

There already are patterns for abstracting data access. Usually they involve getting the data from a separate library (or service) that encapsulates the desired behavior and is structured by intention. Why would I get all NewsItems, when there are millions of them and there is no situation I can conceive of in which I would need all of them? Why would I get a NewsItem by Id, when the Id means nothing to me and things like the URL are more relevant? Why would I load into memory all the items I want to delete, when my condition for them to disappear is a simple WHERE clause?

OK, OK, I know that if you worked with Entity Framework you have a lot of (good) answers to all of these questions. Yet my original question still needs to be considered before you embark on your development journey: do you even need Entity Framework?

The main disadvantages I see for Entity Framework specifically are:

- It diffuses the API for working with data. Instead of writing a NewsItemManager class that gives you items by url and by date, for example, the developer is tempted to write custom queries inside the logic of the application. This leads to difficulties refactoring the code or redesigning the application.

- It hides the complexity of the database. Instead of working with the actual stored data, you work with an abstraction that may look good to you, but hides problems that you are tempted to ignore.

- It forces switches of competencies. If you want to debug and optimize your data access you now need an Entity Framework expert, rather than a database expert.

- It causes technical debt that you are not even aware of. From this list, I believe this to be the most insidious. There is a chance, maybe a very small one, that your application needs functionality that Entity Framework was not designed for. EF works great in every other area except that one. And when you try to fix it, you have several options that are all horrible: create a separate system for it, hack Entity Framework into submission, or leave it slow and bad because everything works so well otherwise. By the time you notice there is a problem, it's already too late.
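Here is what a data API structured by intention could look like, assuming a hypothetical NewsItem with Url, Title and PublishedOn. Callers get items by URL and by day, paginated, and delete by a condition, instead of composing arbitrary queries. The in-memory implementation is only for the sketch. A SQL or Entity Framework implementation could replace it without touching callers, and there DeleteOlderThan could be a single DELETE ... WHERE statement.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical entity for this sketch.
public class NewsItem
{
    public string Url { get; set; }
    public string Title { get; set; }
    public DateTime PublishedOn { get; set; }
}

// The application depends on this intention-revealing API, not on ad hoc queries.
public interface INewsItemManager
{
    NewsItem GetByUrl(string url);
    IReadOnlyList<NewsItem> GetPublishedOn(DateTime day, int page, int pageSize);
    int DeleteOlderThan(DateTime cutoff);
}

// In-memory implementation for the sketch; a SQL or EF-backed class could replace it.
public class InMemoryNewsItemManager : INewsItemManager
{
    private readonly List<NewsItem> _items;

    public InMemoryNewsItemManager(IEnumerable<NewsItem> items) { _items = items.ToList(); }

    // The URL, not an internal Id, is what identifies a news item for the application.
    public NewsItem GetByUrl(string url)
    {
        return _items.FirstOrDefault(i => string.Equals(i.Url, url, StringComparison.OrdinalIgnoreCase));
    }

    // Pagination is part of the contract, so nobody can ask for "all of them".
    public IReadOnlyList<NewsItem> GetPublishedOn(DateTime day, int page, int pageSize)
    {
        return _items.Where(i => i.PublishedOn.Date == day.Date)
                     .OrderBy(i => i.Title)
                     .Skip(page * pageSize)
                     .Take(pageSize)
                     .ToList();
    }

    // In SQL this would be one DELETE ... WHERE, with no need to load the items first.
    public int DeleteOlderThan(DateTime cutoff)
    {
        return _items.RemoveAll(i => i.PublishedOn < cutoff);
    }
}
```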

In our application I will gladly use Entity Framework. It seems some of the basic functionality of MVC, like identity, is designed to work closely with the Entity Framework data abstraction. Yet even so, I will try to abstract the data layer, mostly because I have no need to implement it for this demo. This will probably lead to an interesting consequence: the default MVC modules will use EF in one way, while my application will use it in another.

Entity Framework concepts

An actual advantage of EF that I think is great is the concept of migrations. EF is able to save modifications to the data layer as C# code files that can be added to source control. This helps a lot when working in teams.

As an aside, I once worked on a project that used stored procedures to access the database. The data access layer was getting and changing data using these functions and procedures, which were saved in a folder as .SQL create files. It was easy during deployment to delete all procedures and functions and then recreate them, but what about database schema or data changes? For these we used a folder of .SQL change files. For each file we also needed to create a rollback file, to "fix" whatever it was doing. They were difficult to manage at first, but after a while you got the hang of it. I wonder if Entity Framework allows for this kind of workflow. That would be great. Aside over.

The root of an EF model seems to be the context. It inherits from DbContext (or, as in the default template app, from IdentityDbContext<ApplicationUser>, coupling it inexorably with the identity of the user). This class need not have much code of its own at first. As time goes by, it is probably where changes to the data mechanism get hooked in. The DbContext has properties of type DbSet<SomeEntity>, which are used to query said entities. A simple services.AddDbContext<MyDbContext>() in the Startup class declares your intention to work with a particular context.

A mix of conventions and attributes defines the mapping between your context and the underlying database. A good link to explore this can be found here.

Another interesting quality of Entity Framework is that you can use it in memory, which is very useful for automated testing. Here is a link that explains it.

Using LINQ to Entities and the DbSet properties of the context, one can create, read, update and delete records, but there are some differences from what you may be used to. Delete and update operations by default need to retrieve the items first, then alter them. A good intro to the changes in Entity Framework 7 (since renamed Entity Framework Core) can be found here.

The pattern used by Entity Framework is called "unit of work". If you want to go down the rabbit hole, look it up. A nice article about it and some possible improvements can be found here.
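To get a feel for the unit of work idea, and for why updates and deletes start with a read, here is a toy in the spirit of DbContext and SaveChanges. It is not how EF is implemented: ToyDbContext, Article and the Dictionary standing in for a table are hypothetical. Loaded entities are tracked with a snapshot of their original values. Changes are only recorded, and SaveChanges writes every difference in one go.

```csharp
using System.Collections.Generic;

// Hypothetical entity.
public class Article
{
    public int Id { get; set; }
    public string Title { get; set; }
}

// A toy unit of work in the spirit of DbContext/SaveChanges, not EF's real code.
public class ToyDbContext
{
    private readonly Dictionary<int, string> _table;    // stands in for a table: Id -> Title
    private readonly Dictionary<int, Article> _tracked = new Dictionary<int, Article>();
    private readonly Dictionary<int, string> _originals = new Dictionary<int, string>();
    private readonly HashSet<int> _deleted = new HashSet<int>();

    public ToyDbContext(Dictionary<int, string> table) { _table = table; }

    // Loading an entity starts tracking it and remembers its original values.
    public Article Find(int id)
    {
        Article tracked;
        if (_tracked.TryGetValue(id, out tracked)) return tracked;
        string title;
        if (!_table.TryGetValue(id, out title)) return null;
        var entity = new Article { Id = id, Title = title };
        _tracked[id] = entity;
        _originals[id] = title;
        return entity;
    }

    // Only marks the (already loaded) entity; nothing is deleted yet.
    public void Remove(Article entity) { _deleted.Add(entity.Id); }

    // Writes all recorded changes at once and returns how many rows were affected.
    public int SaveChanges()
    {
        int written = 0;
        foreach (int id in _deleted)
        {
            if (_table.Remove(id)) written++;
            _tracked.Remove(id);
            _originals.Remove(id);
        }
        _deleted.Clear();

        // Detect modifications by comparing tracked entities with their snapshots.
        foreach (var pair in _tracked)
        {
            if (pair.Value.Title != _originals[pair.Key])
            {
                _table[pair.Key] = pair.Value.Title;
                _originals[pair.Key] = pair.Value.Title; // new snapshot
                written++;
            }
        }
        return written;
    }
}
```

Nothing reaches the table until SaveChanges. A second SaveChanges with no new changes writes nothing.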

An interesting reason for using Entity Framework would be when you don't have a lot of control over your persistence medium. I haven't worked with "the cloud" yet, but basically they give you some services and charge you for using them. If EF can abstract that away and minimize cost, it would be a boon, but I have no information about this.

Miscellaneous

The post cannot be complete without some concepts like:

In this series I will not go into details for many of them, so read the info the .NET team has prepared for each subject.

Leaving so soon?

The next post will be about authentication, more exploratory and with code examples.
